Knowing what I know now, would I use it to learn a new programming language or framework? Nah. Just read the documentation. Would I use it to "improve productivity" or "write less boilerplate"? Also, nah. I don't intend to delegate critical parts of my code to a plausible-text generator, because I'll just end up spending more time rewriting naive code.

So, what is it any good for?

Let me outline some of the things that I think Copilot is actually good at.

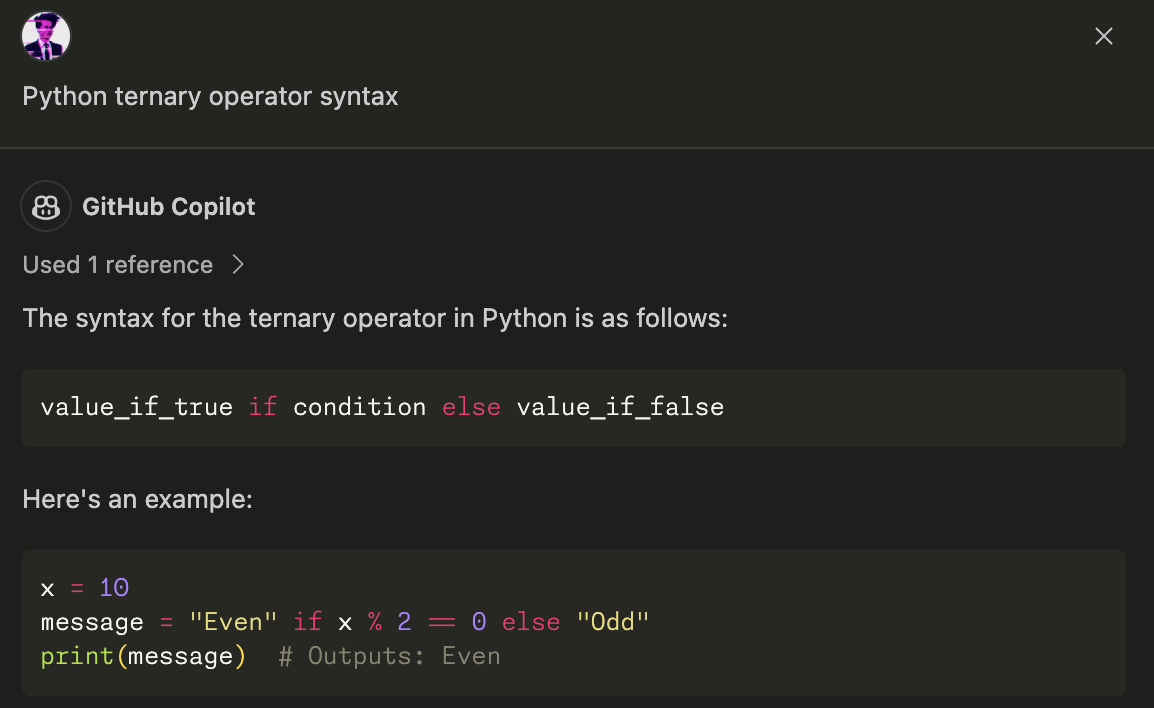

Quickly reminding yourself of forgotten syntax

You know, it's embarrassing how often I forget how to write a ternary conditional in Python. I've looked this up more times than I can count; it just doesn't stick in my dumb flesh brain. Copilot answers this really quickly, without my needing to context-switch into validating search results.
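For any other flesh brains out there, this is the syntax in question. Python has no `?:` operator. Instead, the condition sits in the middle of a "conditional expression". The `parity` function below is just a made-up illustration.

```python
def parity(n: int) -> str:
    # Python's "ternary" is a conditional expression:
    #   <value if true> if <condition> else <value if false>
    return "even" if n % 2 == 0 else "odd"


print(parity(4))  # even
print(parity(7))  # odd
```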

Summarising some undocumented noodle-code you wrote two years ago

You wrote that mangled disaster function for a reason. Copilot can break it down and help you make sense of it. It's pretty damn good at this. You can follow up with specific questions and spin that fork around your spaghetti quickly. Then again, it has no idea about the broader context the function operates within, so you will probably spend some time finagling your queries.

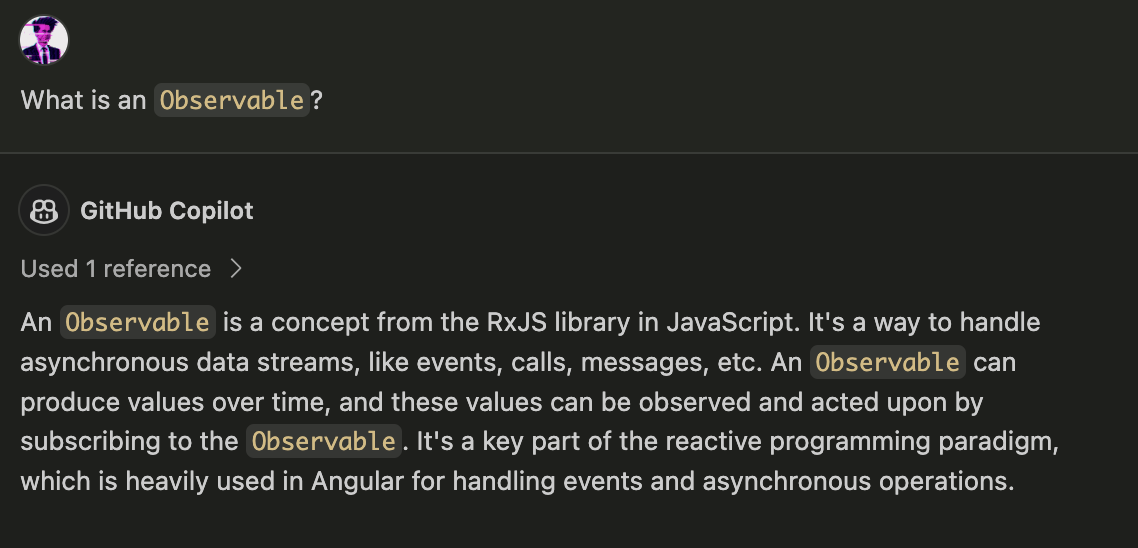

Superficially explaining a new concept

Some libraries can be pretty opinionated about how they work, and they don't necessarily follow the conventions of the language you are using. I sometimes want help wrapping my head around "the right way" to implement a particular class or API. Hey, LLMs give you a pretty good start. But follow up with the documentation, because it has probably been updated since Copilot was last trained.

Offering theories about what could be wrong in some code

Copilot can quickly read exceptions and explain them. It can look at your code and find the little mistakes that might be throwing everything off. It's especially good at the kind of mistakes a human easily overlooks, like incorrect nesting or indentation, or misplaced curly braces. I think Copilot can be a good starting point. But I've also had it lead me down rabbit holes, suggesting ridiculous changes to my code when the problem was one of those little mistakes. This may improve as GPTs become able to handle more context, but it seems we may still be stuck with the hallucinations.
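Here's a contrived example of the kind of indentation slip I mean. It's in Python because that's where indentation is the syntax. The `return` sits one level too deep, inside the loop, so the function quietly returns after the first item. Both function names are hypothetical.

```python
def total_buggy(values):
    total = 0
    for v in values:
        total += v
        return total  # bug: indented inside the loop, so it returns after the first item


def total_fixed(values):
    total = 0
    for v in values:
        total += v
    return total  # dedented: returns only after the loop has visited every item


print(total_buggy([1, 2, 3]))  # 1
print(total_fixed([1, 2, 3]))  # 6
```

Nothing crashes and no exception is raised. That's exactly why this sort of thing is so easy to stare straight past.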

This means being prepared to deal with bogus suggestions.

Writing quick, nasty little scripts

Occasionally I have to do stuff on Windows (yuck), and a couple of times I've used ChatGPT to write a PowerShell (yuck) script because my requirements were simple enough. So there's that!

Okay, but?

The essential problem with these chatbots is that their veneer of usefulness is itself the trap. Aren't all these tasks just ingredients of the stew we call professional growth? Shouldn't we practise debugging, research, and creative thinking, as well as reading and interpreting "noodle code"? These are all important skills for devs. Delegating them to Copilot means handing your personal growth to a statistical plausibility toilet and hitting flush.

The temptation to have a regurgitative language model spew forth code because it is faster leads to unforgivable programming sins that cast you into an unending debugging purgatory. I know this, and I freely confess it, because I've done it and paid the price. How did I end up there? Well, despite the missing context and the hallucinations, the dopamine spike of watching 60 lines of code materialise in a couple of seconds is thrilling. Sometimes, not often, it even runs without any modifications. That experience is enough to make you throw caution to the wind and try it one more time. And then again. Before you know it, you have entire modules written and apparently functioning. What a productivity boost! Take a holiday; you did your work in half the time.

Gone are all the patient safeguards of a codebase built through the necessarily intellectual process that is programming. Outsource your code to a script-puker and you won't know how it behaves at runtime anymore. You won't understand how your data flows, whether it is sanitised appropriately, or whether you're hitting your database with an expensive query. At first this won't matter, because you are getting stuff done. Eventually, though, it will hit you like a tonne of bricks. You'll realise that your code is poorly commented, uses deprecated APIs, and is impossible to troubleshoot precisely because it is so plausible. I ended up nuking all of it. That was easier than figuring out what nonsense was causing all the problems. The Frankensteinian appendages kept falling off even as I stitched new ones on.

Copilot, lead us not into temptation

Like any vice, these vom-bots may be best used in moderation. There are pitfalls to looking up issues on Stack Overflow too, right? The site even warns that copying and pasting code from seemingly helpful threads can spread security vulnerabilities. But as that post summarises:

Copying code itself isn’t always a bad thing. Code reuse can promote efficiency in software development; why solve a problem that has already been solved well? But when the developers use example code without trying to understand the implications of it, that’s when problems can arise.

Similarly, the regurgitator bots' utility cannot be denied, and the developer must simply act responsibly.

Nonetheless, for my part, I'll be using these LLMs only as a last resort. Why? Let's try this thought experiment.

Imagine that the Silicon Valley tech-bro fantasy is realised. Give the vom-bot a simple prompt containing your backlog, and it will write a fully featured application, packaged just how you want it. It will handle support requests and deploy updates that are regression tested and sound. The bot will vom all this up within minutes, a task that might otherwise have taken months or years. In fact, this quickly escalates into a Cambrian explosion of software. The market is not flooded with new apps per se, because the very need for an app market disappears. Instead, everyday users manifest new software capabilities with mere utterances, interpreted, compiled, and executed ephemerally on device and in the cloud. Maybe this software isn't even the result of a regurgitative algorithm anymore; maybe the bots acquire the ability to craft novel solutions.

What is the role of a human programmer then? See also: what is the role of a musician or novelist?

I like to code. I enjoy the exercise at an intellectual level. It is inherently gratifying, and I am rewarded with satisfaction each time I push a new commit. Ultimately, I avoid the vom-bots because they steal away this sense of satisfaction and exchange it for supposed productivity. When the bots rule the world, I may have to swap careers. Maybe I'll go into politics, where the vom-bots are flesh and blood and their sheer audacity means they could never be supplanted.

Nevertheless, I will continue to code just for myself, knowing that it will not contribute to unnecessary carbon emissions or political disinformation. It will be my little act of rebellion, an unproductive sip of self-expressive joy.